I’ve pretty much asked the question in the Topic title! I’m looking at importing a very wide file with many columns and wonder if there is a limit. I can look at breaking it up into separate tables if need be, just seems silly when it’s already together. Thank you.

The reason I ask is that I’ve loaded a file into a table, but it appears to wrap: many columns are appearing as data underneath other column titles, which leads me to believe there is a limit.

Make sure your file is actually saved as .csv and check whether any of the headings contain commas or full stops. The field mapping and types also need to be defined properly. Just sharing what I noticed from my past mistakes.

Like @Shumon is saying.

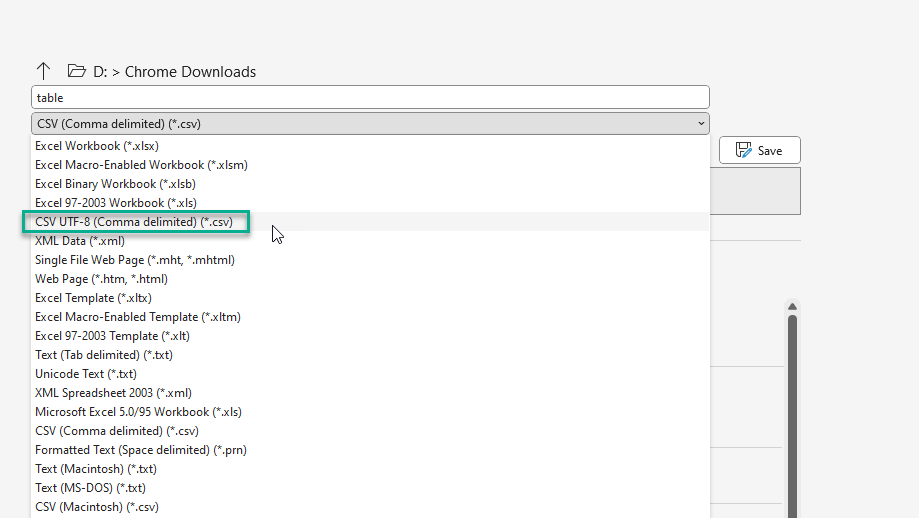

I’d also say you should try opening the CSV in Notepad and make sure it’s formatted correctly there. One more important item is to ensure it’s encoded as UTF-8.
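If eyeballing a couple of hundred columns in Notepad is impractical, the same checks can be scripted. This is only a rough sketch in Python, not anything Tadabase provides: `check_csv` is a name I made up, and it looks for the three things mentioned in this thread (valid UTF-8, commas or full stops in headings, and rows whose width differs from the header row).

```python
import csv
import io


def check_csv(raw: bytes, max_report: int = 5) -> dict:
    """Report encoding, heading, and row-width problems in raw CSV bytes.

    Hypothetical helper for illustration; it is not part of any importer.
    """
    report = {"utf8": True, "columns": 0, "bad_headings": [], "ragged_rows": []}
    try:
        # utf-8-sig also accepts the byte order mark some tools write
        text = raw.decode("utf-8-sig")
    except UnicodeDecodeError:
        report["utf8"] = False
        return report

    reader = csv.reader(io.StringIO(text, newline=""))
    header = next(reader, [])
    report["columns"] = len(header)
    # Headings containing commas or full stops, as suggested earlier in the thread
    report["bad_headings"] = [h for h in header if "," in h or "." in h]

    # Record numbers start at 2 because the header is record 1
    for record_no, row in enumerate(reader, start=2):
        if len(row) != len(header):
            report["ragged_rows"].append((record_no, len(row)))
            if len(report["ragged_rows"]) >= max_report:
                break
    return report
```

You would call it with something like `check_csv(open("export.csv", "rb").read())`, where `export.csv` is a placeholder for your own file. If it reports the file as clean and the import still wraps, the file itself is probably not the problem.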

If you open in Excel you can see the UTF-8 option:

Thanks for all the responses. There aren’t commas etc in the titles (or in the content) as this data has come straight from SQL Server as a csv (and I know the data like the back of my hand). Seeing as nobody is suggesting there is a column limit, it is (most) likely to do with the UTF-8 option, so I will look into that. Thank you.

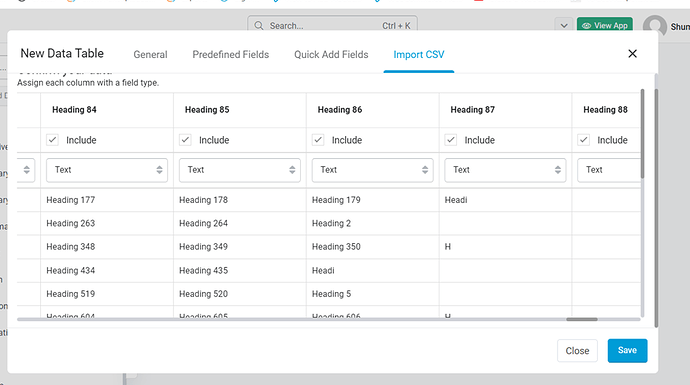

One test with 2000 headings shows that the failure begins at column 86, and the rows show heading names prior to that point. Screenshot below.

You can try creating the tb table with the required table fields first and then importing your csv file into the table.

Thanks Shumon. I knew I wasn’t going mad. Knack have uploaded them no problem and there are a couple of hundred columns so no way I’m creating them manually. I guess I’ll go elsewhere as clearly Tadabase is not for me for this project. Thanks again.

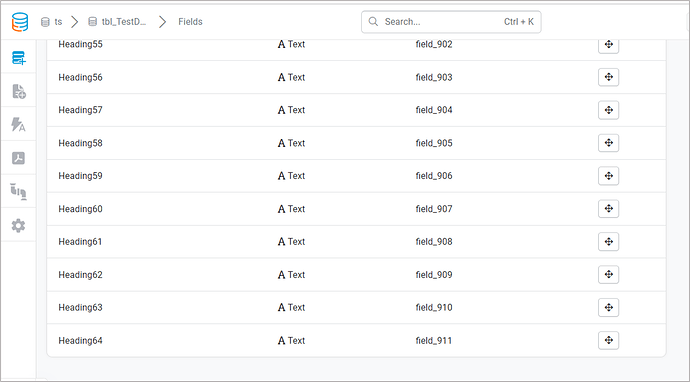

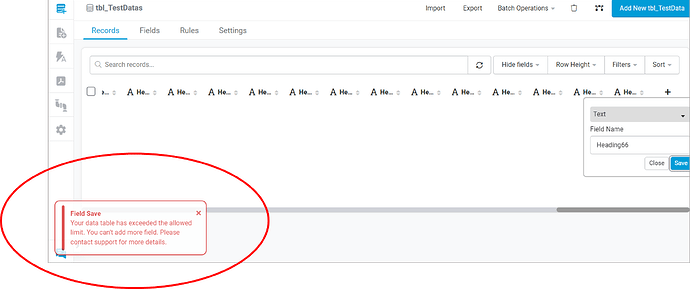

64 appears to be the limit. I tried to manually add more fields and got an error message. See screenshot below.

Wait for a TB staff response before you go elsewhere; they might be able to do something for you.

Field limits are actually more complex than a hard-coded limit. It’s a SQL limitation.

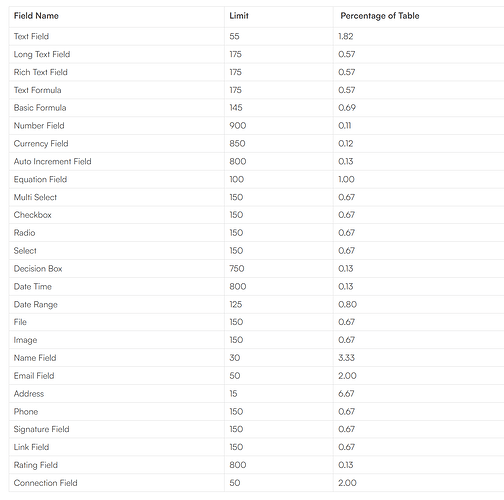

It depends on the field type. Some fields also have additional columns that get added, so they use more fields than meets the eye. For example, address fields have address1, address2, city, state, zip, etc.

You’d be able to add about 800 Number fields but only about 55 regular text fields.
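To get a rough feel for what those two figures mean for a mixed table, here is a back-of-the-envelope sketch in Python. It treats the two numbers above as if they drew on one shared budget that adds up linearly. That is purely an assumption for illustration, not a documented rule, and it ignores field types that add extra columns, such as address fields.

```python
import math

# Approximate figures quoted in the post above
TEXT_LIMIT = 55     # "about 55 regular text fields"
NUMBER_LIMIT = 800  # "about 800 Number fields"


def budget_used(text_fields: int, number_fields: int) -> float:
    """Fraction of one table's capacity used, ASSUMING a shared linear budget."""
    return text_fields / TEXT_LIMIT + number_fields / NUMBER_LIMIT


def tables_needed(text_fields: int, number_fields: int) -> int:
    """Rough count of tables needed under the same assumption."""
    return max(1, math.ceil(budget_used(text_fields, number_fields)))
```

Under that assumption, `tables_needed(200, 0)` comes out at 4, so a file with a couple of hundred columns imported as plain text would not fit in one table, while the same columns imported as numbers would.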

You can see here a breakdown of the field limits:

@jonw perhaps you can try doing it in 2 or 3 stages. It’s been a while for me, so apologies in advance if I am talking nonsense. I’m guessing you got your records in a flat file produced by a View from a SQL Server DTS. Why not go up a layer or two in the data model and reproduce the tables?

Once again, sorry if I am talking nonsense. I’m sure you would have already done that if it was a solution.
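If going back up the data model isn’t an option, the flat file itself could be split column-wise instead. Below is a minimal Python sketch of that idea, assuming the file has a unique key column that can be repeated in every piece so the tables can be connected again afterwards. `split_columns`, `key` and `max_fields` are names I made up, and it assumes every row has the same number of columns as the header.

```python
import csv
import io


def split_columns(text: str, key: str, max_fields: int) -> list[str]:
    """Split CSV text into narrower CSV texts, each repeating the key column.

    Hypothetical helper for illustration; assumes no ragged rows.
    """
    rows = list(csv.reader(io.StringIO(text, newline="")))
    header = rows[0]
    key_idx = header.index(key)
    others = [i for i in range(len(header)) if i != key_idx]

    per_chunk = max_fields - 1  # one slot in every piece is taken by the key
    if per_chunk < 1:
        raise ValueError("max_fields must be at least 2")

    outputs = []
    for start in range(0, len(others), per_chunk):
        cols = [key_idx] + others[start:start + per_chunk]
        buf = io.StringIO(newline="")
        writer = csv.writer(buf, lineterminator="\n")
        for row in rows:
            writer.writerow([row[i] for i in cols])
        outputs.append(buf.getvalue())
    return outputs
```

For example, `split_columns(text, key="id", max_fields=50)` would return a list of CSV strings with at most 50 columns each, all starting with the `id` column. The value 50 and the `id` column name are placeholders; pick whatever fits the field types involved.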

I appreciate your efforts but it’s not feasible. The data doesn’t exist in earlier stages and has been intentionally created as a wide table as the data is only relevant and useable at the atomic level. There are no groups or hierarchies that would help split it up. Also why would I even attempt to split it all up when I have a solution that can handle the data? Anyhow, I’ve moved on so thanks anyway.